Quickstart

Step 1: Install Jan

- Download Jan

- Install the application on your system (macOS, Windows, or Linux)

- Launch Jan

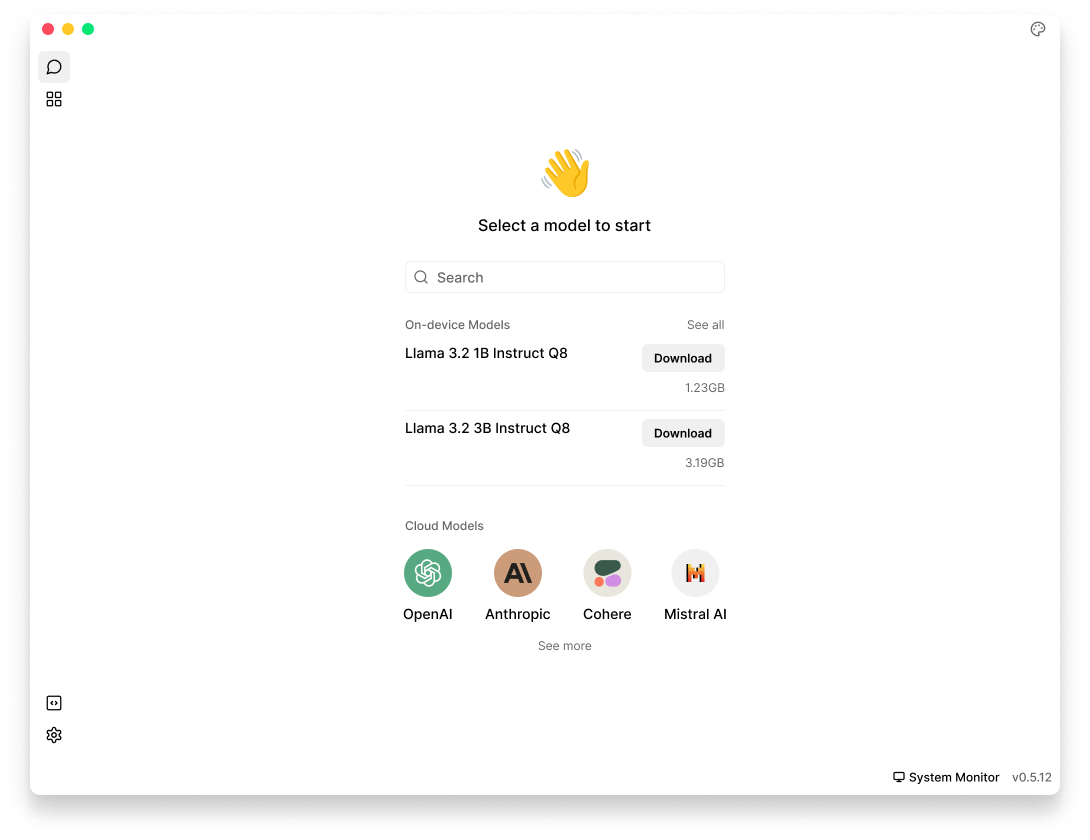

Once Jan launches, you'll see its main interface. No local models are installed yet, but from here you can:

- Download and run local AI models

- Connect to cloud AI providers if desired

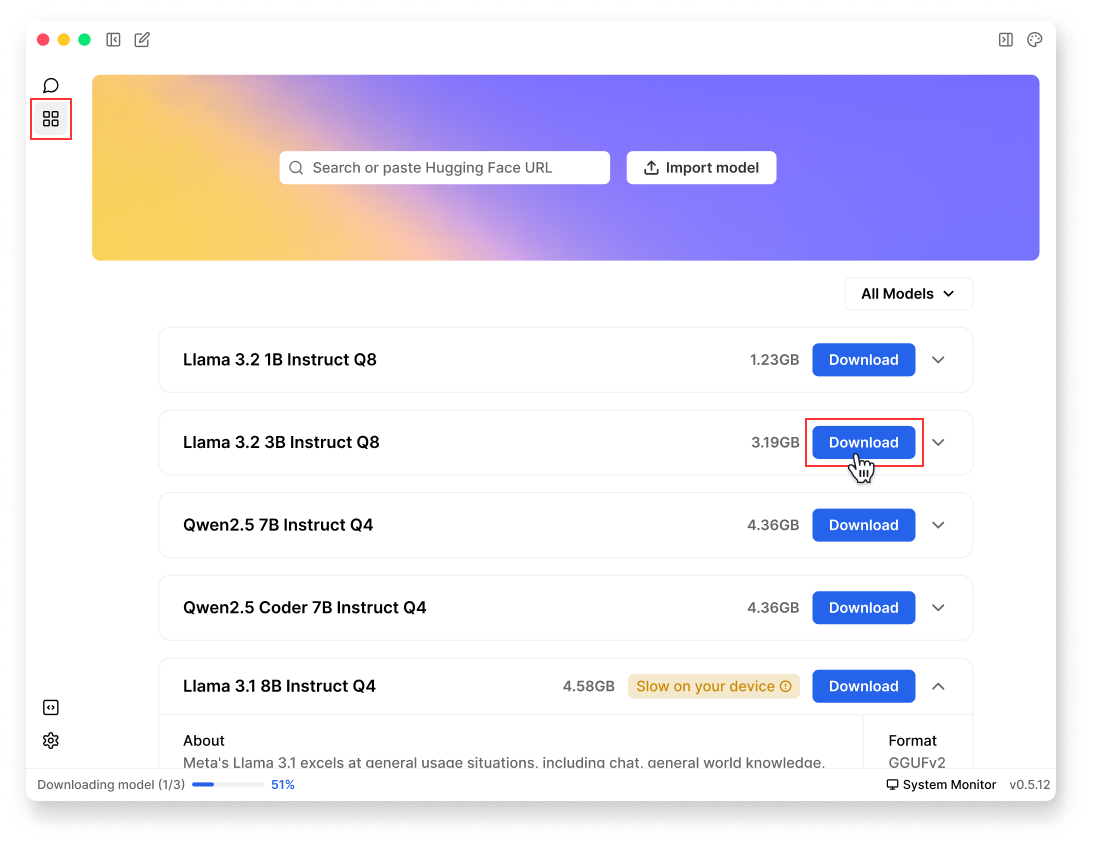

Step 2: Download a Model

Jan offers a range of local AI models, from small, efficient models to larger, more capable ones. To download one:

- Go to Hub

- Browse the available models and click any model to view its details

- Choose a model that fits your needs and your hardware (in particular, your available RAM or VRAM)

- Click Download to start downloading the model

For other ways to install models, see Model Management.
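To judge whether a model fits your hardware, a common rule of thumb (a general heuristic, not a Jan-specific formula) is that a model's weights take about parameter count × bits per weight ÷ 8 bytes, plus extra memory for the context cache and runtime buffers. The sketch below applies that heuristic; the 20% overhead factor and the function names are hypothetical illustrations, so treat the result as a rough lower bound rather than an exact requirement.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: float,
                             overhead: float = 0.2) -> float:
    """Rough memory estimate in GB for a model's weights plus runtime overhead.

    Heuristic only: weights take params * bits / 8 bytes, and `overhead` is a
    hypothetical allowance for the context (KV) cache and runtime buffers.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


def fits_in_memory(params_billions: float, bits_per_weight: float,
                   available_gb: float) -> bool:
    """Return True if the rough estimate fits within the available RAM or VRAM."""
    return estimate_model_memory_gb(params_billions, bits_per_weight) <= available_gb


if __name__ == "__main__":
    # A 7B-parameter model quantized to roughly 4 bits per weight
    needed = estimate_model_memory_gb(7, 4)
    print(f"~{needed:.1f} GB needed")
    print("Fits in 8 GB:", fits_in_memory(7, 4, 8))
```

Quantized models trade some quality for a smaller footprint, which is why the same model is often offered at several sizes.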

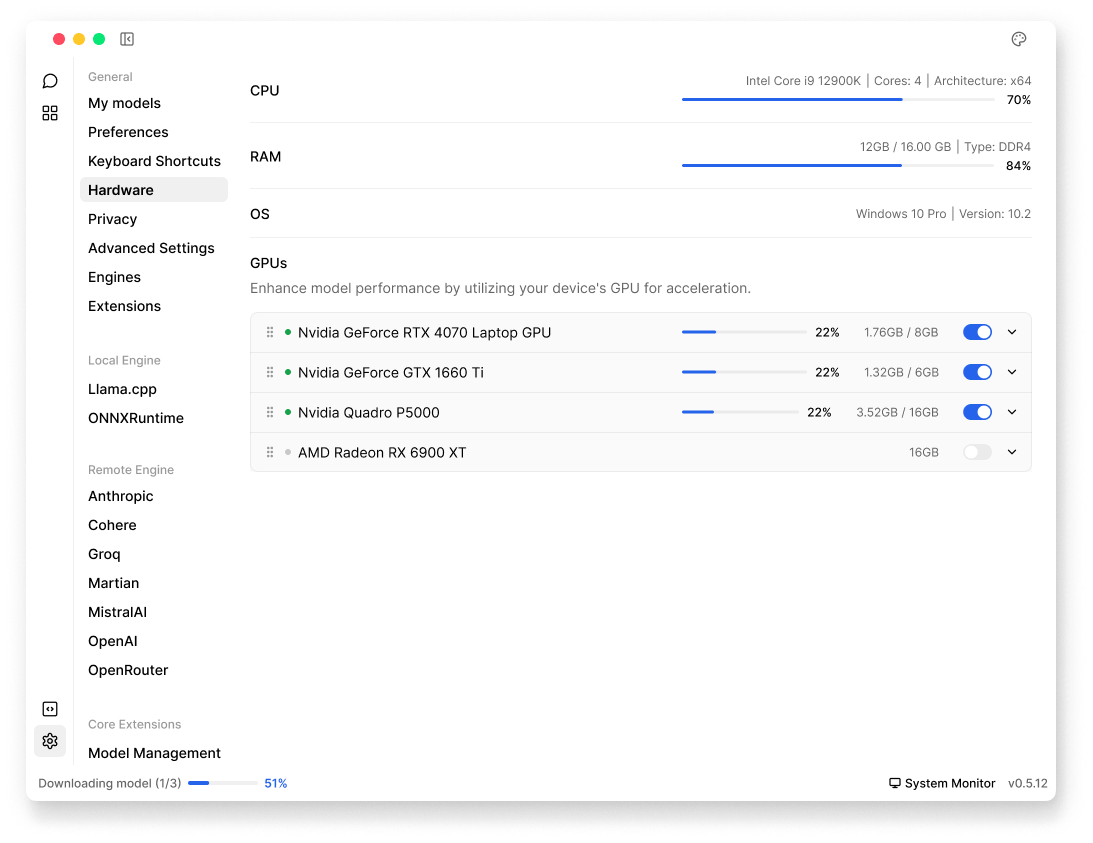

Step 3: Turn on GPU Acceleration (Optional)

While the model downloads, let's optimize your hardware setup. If you have a compatible graphics card, enabling GPU acceleration can make models run considerably faster.

- Navigate to Settings → Hardware

- Enable your preferred GPU(s)

- Reload the app after changing the selection so the change takes effect
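If you are unsure whether your machine has a usable NVIDIA GPU, one quick check outside Jan is the `nvidia-smi` tool that ships with the NVIDIA driver. The sketch below assumes an NVIDIA card with its driver installed; it does not cover Apple Silicon or AMD/Intel GPUs, and `list_nvidia_gpus` is a hypothetical helper, not part of Jan.

```python
import shutil
import subprocess


def list_nvidia_gpus() -> list[str]:
    """Return one "name, total memory" string per NVIDIA GPU reported by nvidia-smi.

    Returns an empty list if nvidia-smi is not installed or fails, for example
    on machines without an NVIDIA driver.
    """
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=10,
        )
    except (subprocess.CalledProcessError, subprocess.TimeoutExpired, OSError):
        return []
    # nvidia-smi prints one CSV line per GPU; drop blank lines
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_nvidia_gpus()
    if gpus:
        for gpu in gpus:
            print("Found GPU:", gpu)
    else:
        print("No NVIDIA GPU detected via nvidia-smi")
```

A non-empty result only means the driver can see a GPU; whether Jan can use it is shown under Settings → Hardware.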

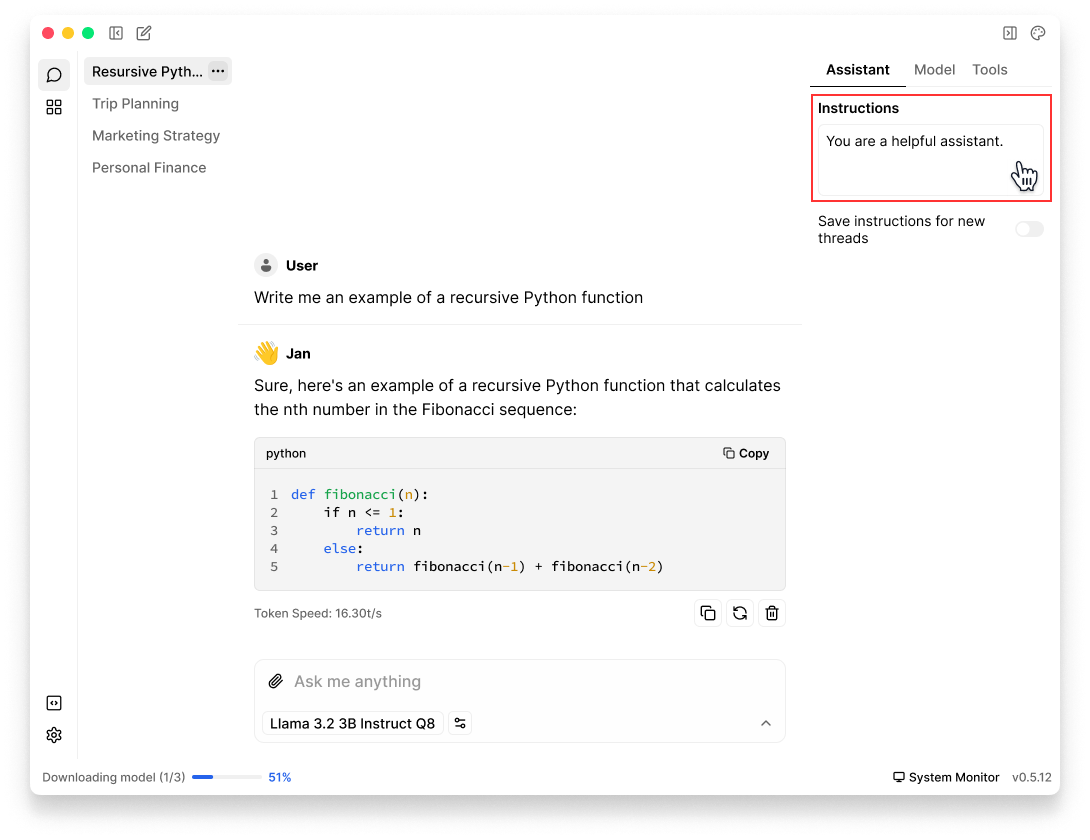

Step 4: Customize Assistant Instructions

Once your model has downloaded and you're ready to start your first conversation with Jan, you can customize how it responds by setting specific instructions:

- In any Thread, click the Assistant tab in the right panel

- Enter your instructions in the Instructions field to define how Jan should respond

You can modify these instructions at any time during your conversation to adjust Jan's behavior for that specific thread.
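Conceptually, assistant instructions work like a system prompt: text placed ahead of the conversation that steers every reply. The sketch below assumes the common OpenAI-style message format (a list of role/content pairs) to show where such instructions would sit. It illustrates the idea only and is not Jan's internal code; `build_messages` is a hypothetical helper.

```python
def build_messages(instructions: str, history: list[dict[str, str]],
                   user_input: str) -> list[dict[str, str]]:
    """Assemble an OpenAI-style message list with instructions as a system message."""
    messages: list[dict[str, str]] = []
    if instructions.strip():
        # Instructions go first so they apply to the whole thread
        messages.append({"role": "system", "content": instructions.strip()})
    messages.extend(history)  # earlier turns in this thread
    messages.append({"role": "user", "content": user_input})
    return messages


if __name__ == "__main__":
    msgs = build_messages(
        "You are a concise assistant. Answer in at most three sentences.",
        [],
        "What is GPU acceleration?",
    )
    for m in msgs:
        print(m["role"], "->", m["content"])
```

In this sketch the instructions are resent with every request, which is one way an edit to them can change later replies in the same thread; it is not a description of how Jan stores them.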

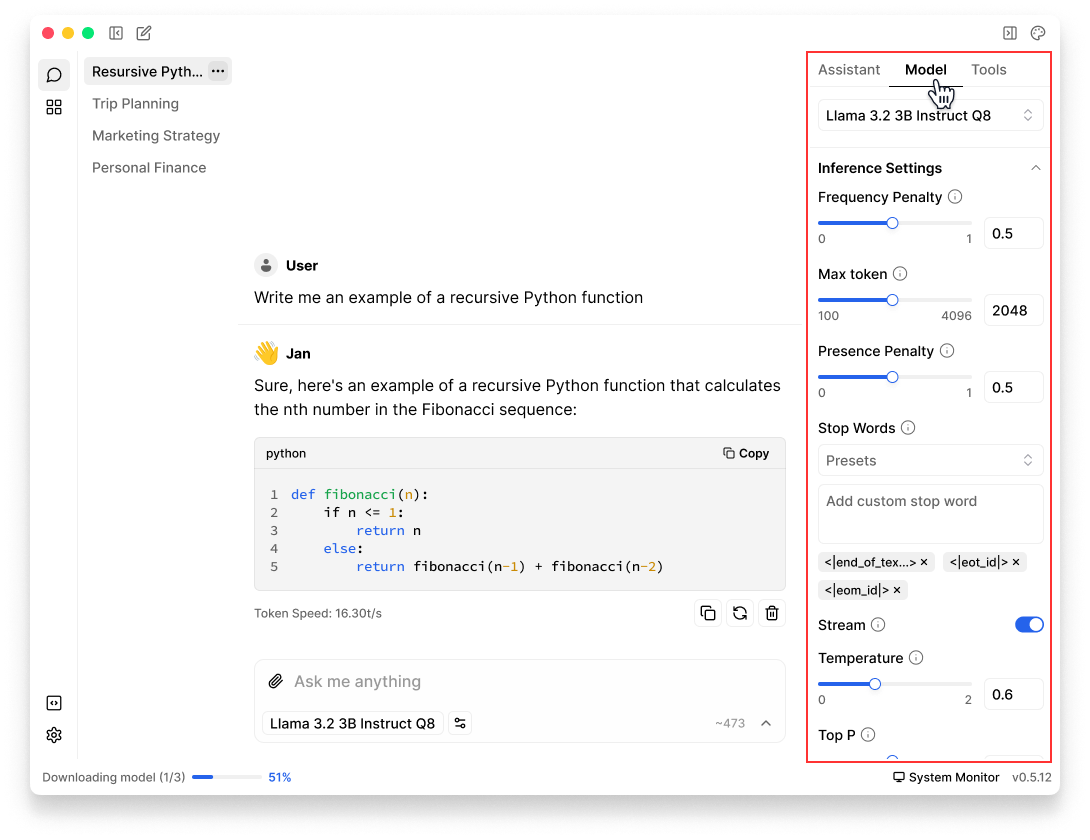

Step 5: Start Chatting and Fine-tune Settings

Now that your model is downloaded and instructions are set, you can begin chatting with Jan. Type your message in the input field at the bottom of the thread to start the conversation.

You can further customize your experience by:

- Adjusting model parameters in the Model tab in the right panel

- Trying different models for different tasks by clicking the model selector in the Model tab or the input field

- Creating new threads with different instructions and model configurations
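Model parameters change how the model chooses each next token. One parameter commonly exposed by LLM runtimes is temperature: under the standard definition, the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities, so lower values make output more deterministic and higher values make it more varied. The sketch below shows that effect on made-up logits; the numbers are illustrative and not taken from any Jan model.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities, scaled by a positive temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # illustrative scores for three candidate tokens
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

At low temperature almost all probability goes to the top-scoring token; at high temperature the distribution flattens, which is why higher settings tend to give more varied answers.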

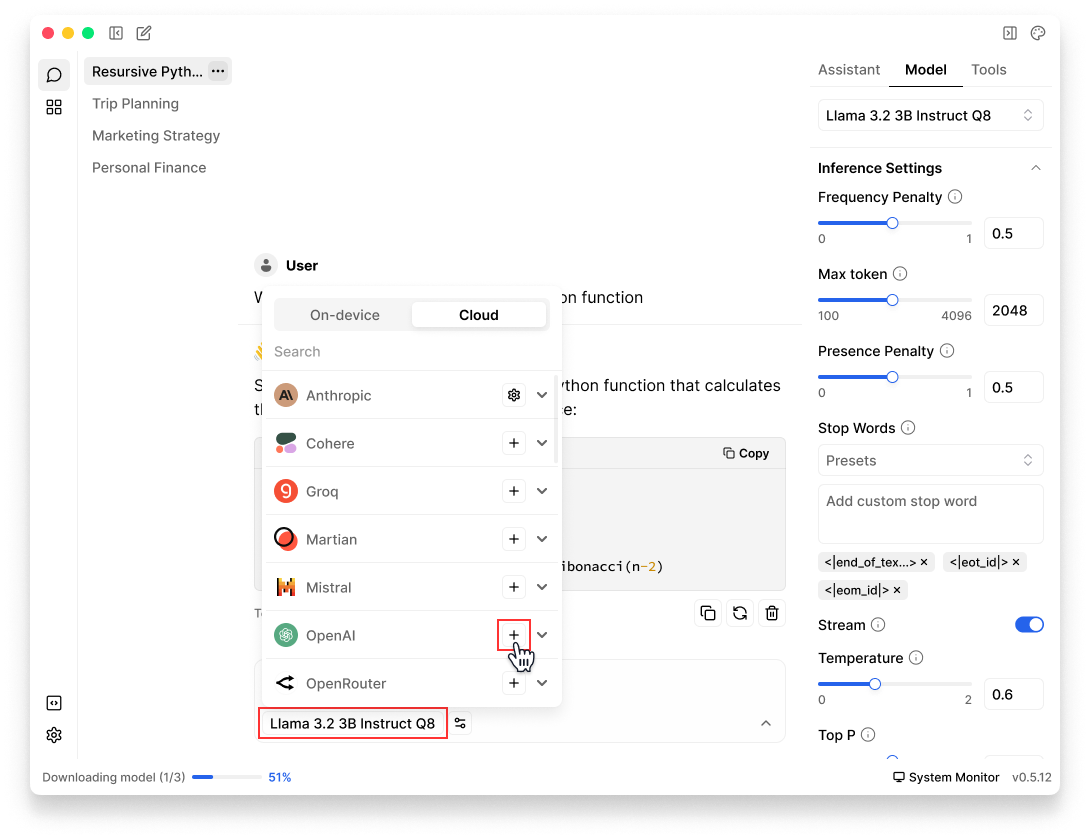

Step 6: Connect to Cloud Models (Optional)

Jan supports both local and remote AI models. You can connect to remote AI services such as OpenAI (GPT-4, o1, and others), Anthropic (Claude), Groq, and Mistral, as well as other OpenAI API-compatible providers.

- Open any Thread

- Click the Model tab in the right panel or the model selector in the input field

- Choose the Cloud tab

- Choose your preferred provider (Anthropic, OpenAI, etc.)

- Click the Add (➕) icon next to the provider

- Obtain a valid API key from your chosen provider, and make sure the key has sufficient credits and the appropriate permissions

- Copy your API key and paste it into Jan

See Remote APIs for detailed configuration.
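If Jan rejects a key, it can help to test the key directly against the provider. The sketch below builds, but does not send, an OpenAI-style chat completions request using only the Python standard library. The base URL `https://api.openai.com/v1`, the `/chat/completions` path, and Bearer authentication follow OpenAI's public API; other providers use their own base URLs and model names, and some (such as Anthropic's native API) expect different headers, so check these values against your provider's documentation. The `OPENAI_API_KEY` environment variable and the `gpt-4o-mini` model name are example assumptions.

```python
import json
import os
import urllib.request


def build_chat_request(api_key: str, model: str, prompt: str,
                       base_url: str = "https://api.openai.com/v1") -> urllib.request.Request:
    """Build an OpenAI-style chat completions request without sending it."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY", "")  # assumed variable name
    req = build_chat_request(key, "gpt-4o-mini", "Say hello.")
    print(req.get_method(), req.full_url)
    # Uncomment to actually send it (uses credits; raises HTTPError on a bad key):
    # with urllib.request.urlopen(req, timeout=30) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

A 401 response when sending usually points to an invalid key, while other 4xx errors often indicate missing credits or permissions.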

What's Next?

Now that Jan is up and running, explore further:

- Learn how to download and manage your models.

- Customize Jan's application settings according to your preferences.